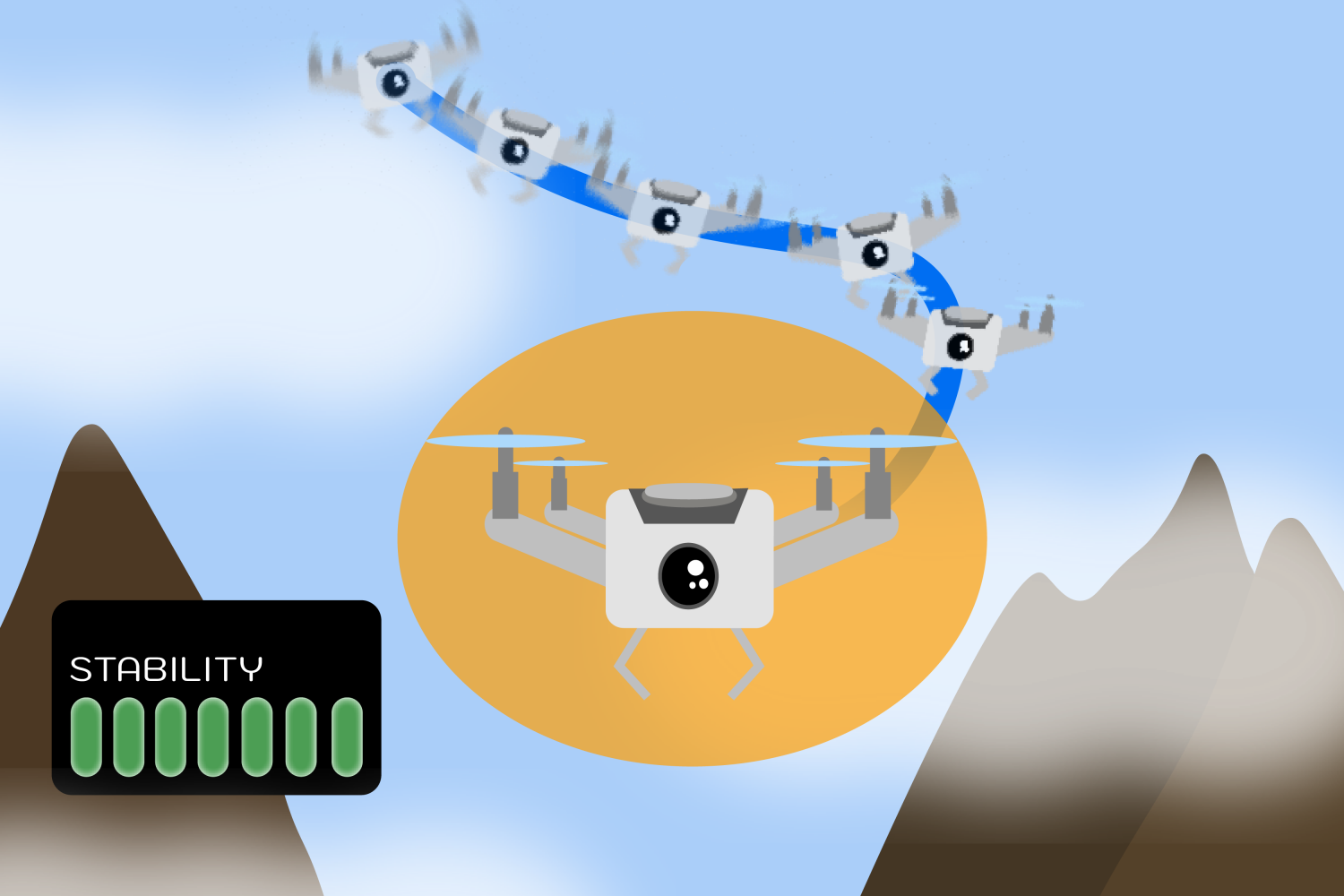

Neural networks have made a seismic impact on how engineers design controllers for robots, catalyzing more adaptive and efficient machines. Still, these brain-like machine-learning systems are a double-edged sword: Their complexity makes them powerful, but it also makes it difficult to guarantee that a robot powered by a neural network will safely accomplish its task.

The traditional way to verify safety and stability is through techniques called Lyapunov functions. If you can find a Lyapunov function whose value consistently decreases, then you can know that unsafe or unstable situations associated with…