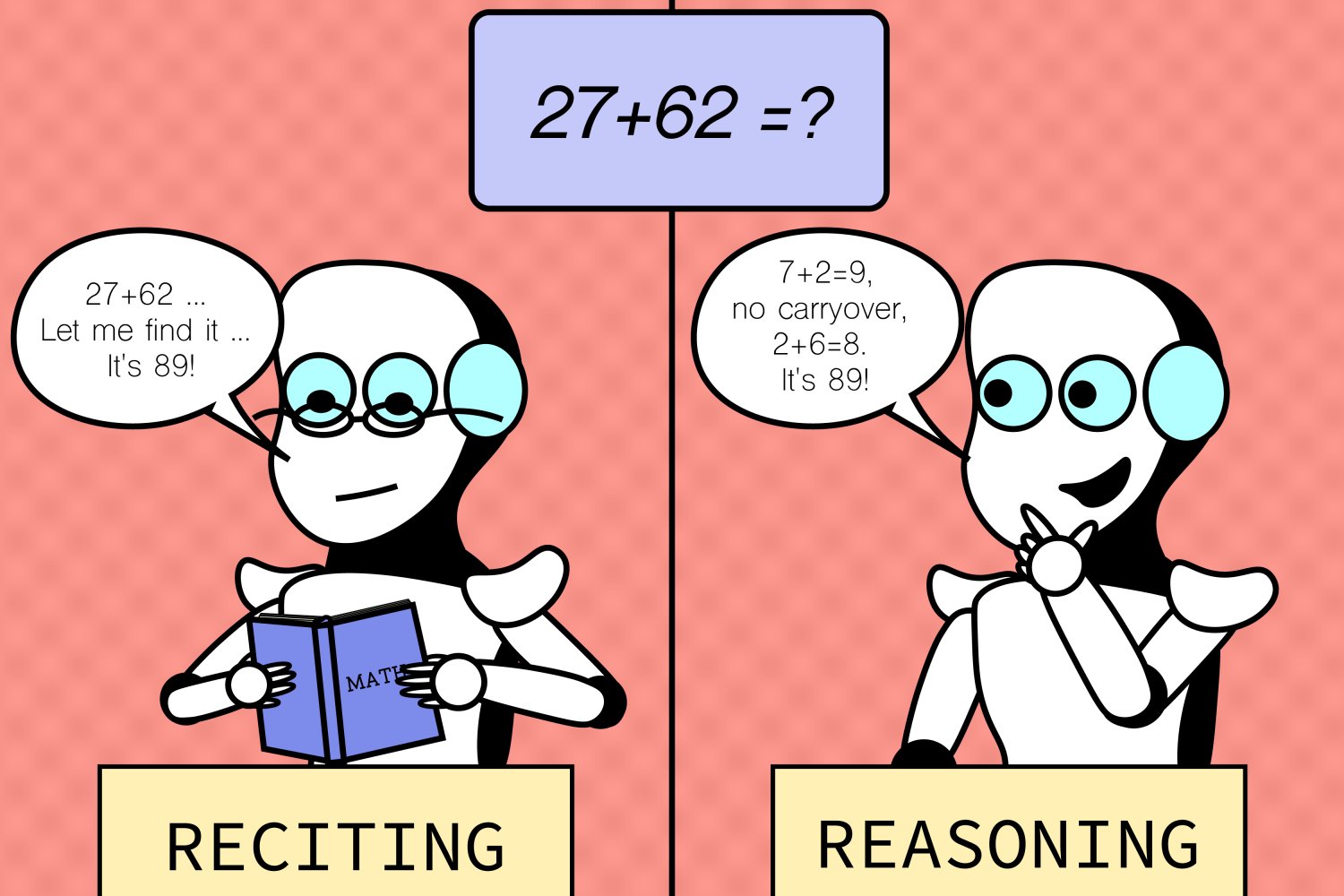

When it comes to artificial intelligence, appearances can be deceiving. The mystery surrounding the inner workings of large language models (LLMs) stems from their vast size, complex training methods, hard-to-predict behaviors, and elusive interpretability.MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers recently peered into the proverbial magnifying glass to examine how LLMs fare with variations of different tasks, revealing intriguing insights into the interplay between memorization and reasoning skills. It turns out that their reasoning abilities are often overestimated.The study compared “default tasks,” the common tasks a model is…